Digitizing Facial Movement During Singing : Prototype

We will use a three-part apparatus to track facial movement during simple singing: a barometric pressure sensor to monitor airflow, electromyography (EMG) electrodes to monitor the extrinsic facial muscles, and a camera to track facial movement. As this data feeds in, we will manually record fluctuations at different frequencies. Specifically, the aim is to record changes in pressure, muscle contraction and position for each note in one octave, compared against the face’s resting state. Ideally, we will gather data from at least five professional vocalists.
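To label each recording with the pitch being sung, it helps to have the target frequencies for the octave written down in advance. The sketch below is a minimal illustration, not part of our apparatus: the class name `OctaveNotes`, the choice of C4 as the starting note, and the use of equal temperament with A4 = 440 Hz are all assumptions.

```java
// Hypothetical helper (not part of the original sketch): lists the
// equal-tempered pitches of one octave so each recording can be
// labeled with its target frequency.
class OctaveNotes {
    private static final String[] NAMES = {
        "C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"
    };

    // Frequency in Hz of a MIDI note number, assuming A4 (MIDI 69) = 440 Hz.
    static double frequency(int midiNote) {
        return 440.0 * Math.pow(2.0, (midiNote - 69) / 12.0);
    }

    // Note name with octave number, e.g. MIDI 60 -> "C4".
    static String name(int midiNote) {
        return NAMES[midiNote % 12] + (midiNote / 12 - 1);
    }

    public static void main(String[] args) {
        // One octave from C4 (MIDI 60) up to and including C5 (MIDI 72).
        // The starting note is an assumption; any octave works the same way.
        for (int note = 60; note <= 72; note++) {
            System.out.printf("%-3s %.2f Hz%n", name(note), frequency(note));
        }
    }
}
```

Each semitone multiplies the frequency by the twelfth root of two, so the top note of the octave is exactly double the bottom one.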

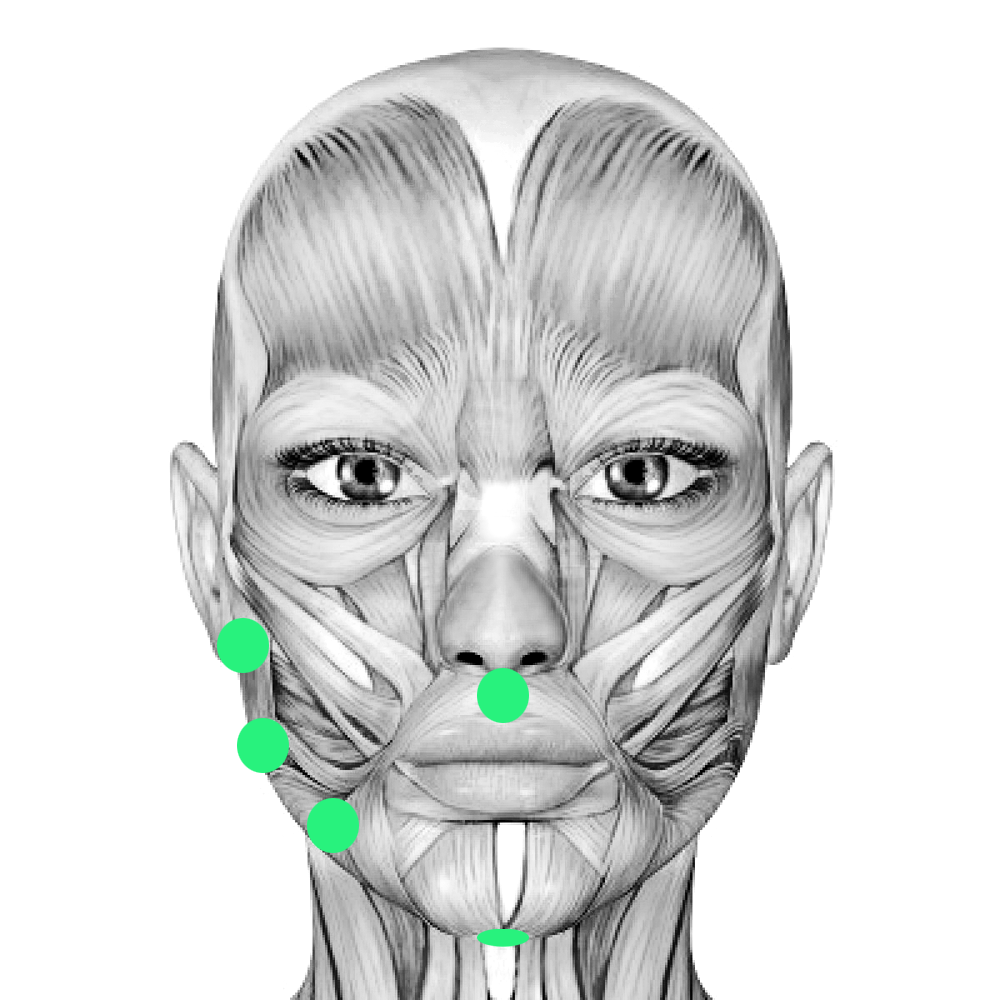

The barometric pressure sensor will be placed in front of the test subject, discreetly mounted on a small stand. The EMG electrodes will be attached (via medical adhesive) over the extrinsic muscles of the larynx involved in articulation; relevant muscles include the orbicularis oris (lips), geniohyoid (tongue and lower jaw), mylohyoid (mandible), masseter (cheek and jaw) and the hyoglossus (tongue). Finally, facial movement and geometry will be monitored using a Kinect.

Digitizing Facial Movement During Singing : Sensor Assembly

Pressure Sensor Circuit Diagram:

[IMAGE]

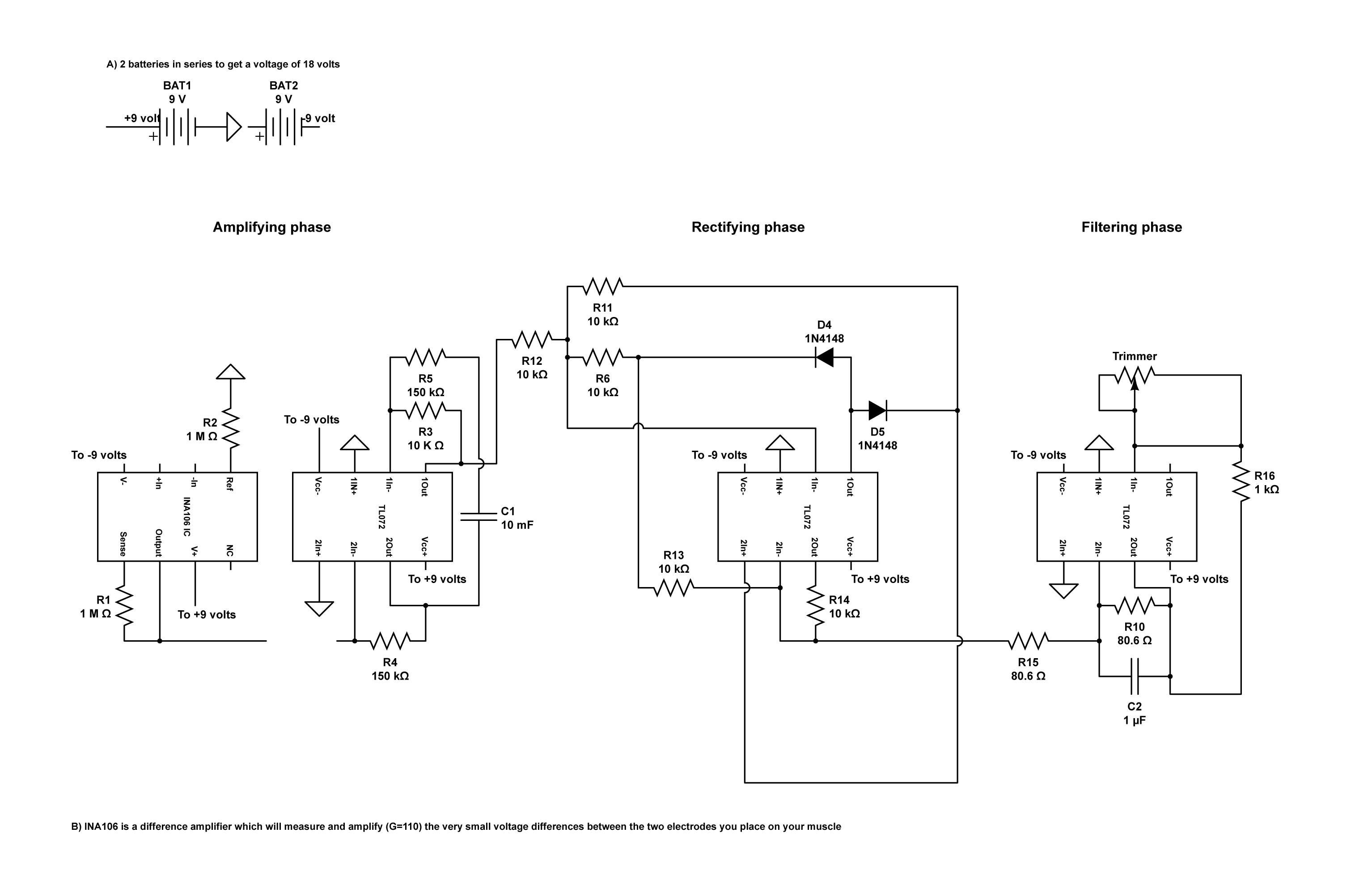

DIY Electromyography Circuit Diagram & EMG Map:

FaceOSC/Syphon/Processing Source Code:

import codeanticode.syphon.*;

import oscP5.*;

PGraphics canvas;

SyphonClient client;

OscP5 oscP5;

Face face = new Face();

public void setup() {

size(640, 480, P3D);

println("Available Syphon servers:");

println(SyphonClient.listServers());

client = new SyphonClient(this, "FaceOSC");

oscP5 = new OscP5(this, 8338);

}

public void draw() {

background(255);

if(client.available()) {

canvas = client.getGraphics(canvas);

image(canvas, 0, 0, width, height);

}

print(face.toString());

}

void oscEvent(OscMessage m) {

face.parseOSC(m);

}

+ LINK to the Face class.

Digitizing Facial Movement During Singing : Troubleshooting

Currently, we’ve only run real tests on the FaceOSC/Syphon/Processing facial geometry detector. We are able to mine the following data (seen in the above screen capture):

pose

scale: 3.8911576

position: [ 331.74725, 153.13004, 0.0 ]

orientation: [ 0.107623726, -0.06095604, 0.085640974 ]

gesture

mouth: 14.871553 4.777506

eye: 2.649438 2.6013117

eyebrow: 7.41446 7.520543

jaw: 24.912415

nostrils: 5.7812777

Because we are using the camera feed to track geometric displacement in the face (relating to muscle movement), only the data in the “gesture” category really applies. In FaceOSC's output, the two mouth values are width and height, while the paired eye and eyebrow values are the left and right sides. Fortunately, scale, position and orientation do not bias the gesture readings. Unfortunately, there is a fair bit of natural fluctuation in the readings. So far, we have found that fluctuations of more than 0.5 reflect actual movement, while those under 0.5 are natural oscillations in the camera’s reading.
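The 0.5 cutoff can be expressed as a simple dead band around a resting baseline. The following is a minimal sketch under stated assumptions: the class `GestureDeadband` and its method names are hypothetical, the baseline is assumed to be a reading taken while the face is at rest, and a change of exactly 0.5 is treated as noise because our observation does not settle that case.

```java
// Hypothetical helper: suppresses camera jitter in a single gesture
// reading by comparing it against a resting baseline.
class GestureDeadband {
    // Cutoff from our observation; readings are in FaceOSC's own units.
    static final double NOISE_THRESHOLD = 0.5;

    // Returns the signed displacement from rest, or 0.0 when the change
    // falls inside the noise band (magnitude of 0.5 or less).
    static double displacement(double resting, double current) {
        double delta = current - resting;
        return Math.abs(delta) > NOISE_THRESHOLD ? delta : 0.0;
    }

    // True when the reading differs from rest by more than the threshold.
    static boolean isMovement(double resting, double current) {
        return displacement(resting, current) != 0.0;
    }
}
```

In the sketch's `draw()` loop, a call such as `GestureDeadband.displacement(restingJaw, currentJaw)` could be applied to each gesture value before logging it, where `restingJaw` and `currentJaw` are placeholder names for values read from the `Face` object.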

Digitizing Facial Movement During Singing : Next Steps

With that established, our current plan of action is as follows: physically assembling the remaining two parts of the three-part testing apparatus; testing and continued troubleshooting; recording data; pinpointing connections in the data (the relationship between movement, muscle activity, airflow and pitch); and finally, applying those relationships to an application that generates sound via our sensing apparatus.
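One possible first pass at pinpointing connections in the data is to compute a correlation coefficient between a sensor reading and the sung pitch across the notes of the octave. We have not settled on an analysis method; the sketch below uses the Pearson correlation purely as an illustration, and the class name `PitchCorrelation` is hypothetical.

```java
// Hypothetical analysis helper: measures how strongly two series
// (for example jaw opening and sung frequency, one pair per note)
// move together in a straight-line fashion.
class PitchCorrelation {
    // Pearson correlation coefficient, in the range [-1, 1].
    // Returns NaN if either series has no variation at all.
    static double pearson(double[] x, double[] y) {
        if (x.length != y.length || x.length < 2) {
            throw new IllegalArgumentException(
                "series must have equal length and at least two samples");
        }
        int n = x.length;

        // Mean of each series.
        double meanX = 0.0, meanY = 0.0;
        for (int i = 0; i < n; i++) {
            meanX += x[i];
            meanY += y[i];
        }
        meanX /= n;
        meanY /= n;

        // Sums of products of the deviations from the means.
        double cov = 0.0, varX = 0.0, varY = 0.0;
        for (int i = 0; i < n; i++) {
            double dx = x[i] - meanX;
            double dy = y[i] - meanY;
            cov += dx * dy;
            varX += dx * dx;
            varY += dy * dy;
        }
        return cov / Math.sqrt(varX * varY);
    }
}
```

A value near 1 or -1 would suggest that the reading rises or falls steadily with pitch, while a value near 0 would suggest no straight-line relationship. A curved relationship could still exist and would need a different measure.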