Design

Interaction space & hardware

In working through the nitty-gritty of extracting the biomechanics of expressivity from Kinect data, I realized that I had lost sight of a primary design goal: processing the gestural activity of two people interacting with one another, rather than that of a single individual interacting with a gesture-sensing technology. So I ran an experiment to supplement my work from the previous week.

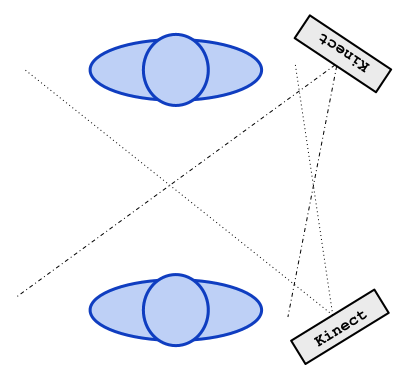

I arranged multiple Kinects and two gesturing individuals as depicted in the diagram to the right. The infrared projectors and cameras did interfere with one another to a limited degree. However, the results using my prototype software were promising: even though the cameras were located at the periphery of the interaction space, decidedly out of the way of the two individuals, they still yielded good results.

This arrangement, as depicted, is my final design for the interaction space. Because of the limitations on generating skeleton tracking for more than a single Kinect on one computer, the cameras will need to be connected to separate computers that communicate over a network connection with a central software process. While this adds a layer of complexity, it is fairly trivial to implement, especially as I already have code available for this purpose.
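As a rough illustration of the networked arrangement, the sketch below shows one way a camera host might package a tracked skeleton and send it to the central process. It is written in Python for brevity and is not the existing C# code mentioned above. The `SkeletonFrame` fields, the joint names, the JSON-over-UDP transport, and the host and port values are all assumptions made for this example.

```python
import json
import socket
from dataclasses import dataclass, asdict


@dataclass
class SkeletonFrame:
    camera_id: str     # hypothetical label for the sending Kinect host
    timestamp_ms: int  # capture time in milliseconds
    joints: dict       # joint name -> [x, y, z] in meters (camera space)


def encode_frame(frame: SkeletonFrame) -> bytes:
    """Serialize one frame to UTF-8 JSON, small enough for a single datagram."""
    return json.dumps(asdict(frame)).encode("utf-8")


def decode_frame(payload: bytes) -> SkeletonFrame:
    """Rebuild a frame on the central process from a received datagram."""
    return SkeletonFrame(**json.loads(payload.decode("utf-8")))


def send_frame(frame: SkeletonFrame, host: str, port: int) -> None:
    """Camera-host side: fire-and-forget one frame to the central process."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_frame(frame), (host, port))


def receive_frame(sock: socket.socket) -> SkeletonFrame:
    """Central side: block until one datagram arrives, then decode it."""
    payload, _sender = sock.recvfrom(65535)
    return decode_frame(payload)
```

UDP is used here only because a dropped frame is quickly superseded by the next one; a TCP stream would work equally well if ordering and delivery matter more than latency. The central process would also need to transform each camera's joints into a shared coordinate frame, which this sketch leaves out.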

Visualization

My ultimate goal is to generate the actual expressivity vectors for each of the two interacting individuals. Generating two vectors does not, by itself, provide much to look at. While these could be used as input to any number of applications (games, environmental control, crazy video or audio processing, etc.), it’s unlikely I’ll have time to construct such an application. However, raw numbers at a console prompt are not particularly meaningful either.

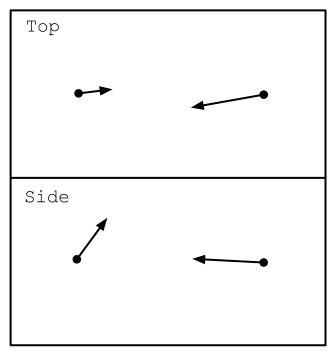

The mockup to the right illustrates a very simple approach to plotting and grokking the expressivity vectors of two interacting individuals. As I found with my first prototype, the data only begins to build meaning for the viewer when it is seen in motion.

Use

My concept for demonstrating the final system is simple. Borrowing a page from the social science playbook, I have in mind two individuals interacting while a “moderator” prompts topics of discussion for them. The display of their expressivity is meant only for an “audience” engaged in a technology-enhanced form of people watching.

Possible Enhancements

Should time permit, one or more of the following could be incorporated into the final design.

Vibration to reduce interference

A simple scheme of vibration applied to Kinect cameras has been conclusively demonstrated to reduce the interference among multiple cameras in the same space. As I need the vibration rigs for another project and nearly all of the necessary hardware has arrived, I might construct the vibration mounts and driver circuits to improve skeletal tracking.

Seated or standing mode

While the seated mode of the Kinect is noticeably noisier than the standard mode, the complication of offering both is not great.

“Heat” of expressivity over time

I am considering adding a color element to the visual display (as depicted above) that would present a historical picture of the expressivity of either or both of the interacting individuals. For example, a color scale might extend from blue to red and represent expressivity over a sliding window of time.
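One possible reading of this idea, sketched in Python rather than the project's C#: keep the most recent expressivity samples in a fixed-size window, average them, and map the average linearly from blue to red. The class name, the window size, and the assumption that expressivity is already normalized to the range 0 to 1 are all illustrative choices, not part of the design above.

```python
from collections import deque


class ExpressivityHeat:
    """Tracks recent expressivity samples and maps their mean to a color."""

    def __init__(self, window_size: int = 90):
        # 90 samples is roughly 3 seconds at 30 frames per second (assumed rate).
        # A deque with maxlen drops the oldest sample automatically.
        self.samples = deque(maxlen=window_size)

    def add(self, value: float) -> None:
        # Clamp to [0, 1]; the normalization itself is assumed to happen upstream.
        self.samples.append(min(1.0, max(0.0, value)))

    def level(self) -> float:
        """Mean expressivity over the window, or 0.0 before any samples arrive."""
        if not self.samples:
            return 0.0
        return sum(self.samples) / len(self.samples)

    def color(self) -> tuple:
        """Linear blend from blue (calm) to red (highly expressive) as (R, G, B)."""
        t = self.level()
        return (round(255 * t), 0, round(255 * (1.0 - t)))
```

A plain mean treats every sample in the window equally. An exponentially weighted average would let recent movement dominate, which may or may not read better on screen.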

End Goals

Open source code

I plan to make the C# code I am writing available under an MIT license (one of the most permissive) through my GitHub account. I am already writing the code so that calculation, data structures, visualization, etc. are cleanly separated. Thus, someone else making use of the code should have low hurdles to clear in incorporating it into another project. C# is quite similar in structure to Java; in fact, I’ve easily ported open source Java libraries to C#. As Processing is based on Java, incorporating my code into Processing for ITP students should be relatively easy at some future date.

Informal test of correlation to perceived expressivity

As part of demonstrating my final system, I intend to construct a simple informal survey asking how well the measures of expressivity my system generates correlate with the expressivity the audience perceives happening in front of them. If this requires IRB approval, I may need to skip it and simply rely on comments made and impressions offered during the demonstration.

Progress Update

As already noted, I experimented with my planned interaction space and an arrangement of two Kinect cameras. I am presently reviewing the Anthropometry chapter in David Winter’s Biomechanics and Motor Control of Human Movement while working to remember my undergraduate coursework in statics and solid mechanics, so as to generate meaningful models of expressivity in the kinetic chain of an upper body.
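To make the anthropometric side concrete, here is a minimal Python sketch (not the project's C#) of one building block such a model might use: locating the center of mass of a limb segment from two tracked joints, then combining segments into a single point for the arm. The function names are hypothetical, and the mass and center-of-mass fractions in the usage lines are the values commonly quoted from Winter's anthropometric table; they should be checked against the book before being relied on.

```python
def segment_center_of_mass(proximal, distal, com_fraction):
    """Point along the segment, com_fraction of the way from proximal to distal."""
    return tuple(p + com_fraction * (d - p) for p, d in zip(proximal, distal))


def combined_center_of_mass(segments):
    """Mass-weighted mean of segment centers; segments is a list of (mass, point)."""
    total_mass = sum(mass for mass, _ in segments)
    return tuple(
        sum(mass * point[axis] for mass, point in segments) / total_mass
        for axis in range(3)
    )


# Usage with made-up joint positions in meters and an assumed 70 kg body mass.
body_mass = 70.0
shoulder, elbow, wrist = (0.0, 1.4, 2.0), (0.0, 1.1, 2.0), (0.25, 1.1, 2.0)

# Fractions as commonly cited from Winter's table (verify against the source):
# upper arm: 0.028 of body mass, center of mass 0.436 from the proximal end;
# forearm:   0.016 of body mass, center of mass 0.430 from the proximal end.
upper_arm = (0.028 * body_mass, segment_center_of_mass(shoulder, elbow, 0.436))
forearm = (0.016 * body_mass, segment_center_of_mass(elbow, wrist, 0.430))

arm_com = combined_center_of_mass([upper_arm, forearm])
```

Tracking how a point like `arm_com` moves from frame to frame is one possible input to an expressivity measure; the measure itself is outside the scope of this sketch.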