Presentation: LINK

Biomechanics and Baseball

Meanwhile in Canada …

In celebration of R.A. Dickey’s addition to the Toronto Blue Jays and the Jays’ home opener this week, Discovery Canada’s show Daily Planet motion-captured and analyzed the biomechanics of Dickey’s primary (and weeeeeiiiiiirdddd) pitch, the knuckleball.

Digitizing Facial Movement During Singing : Build Progress

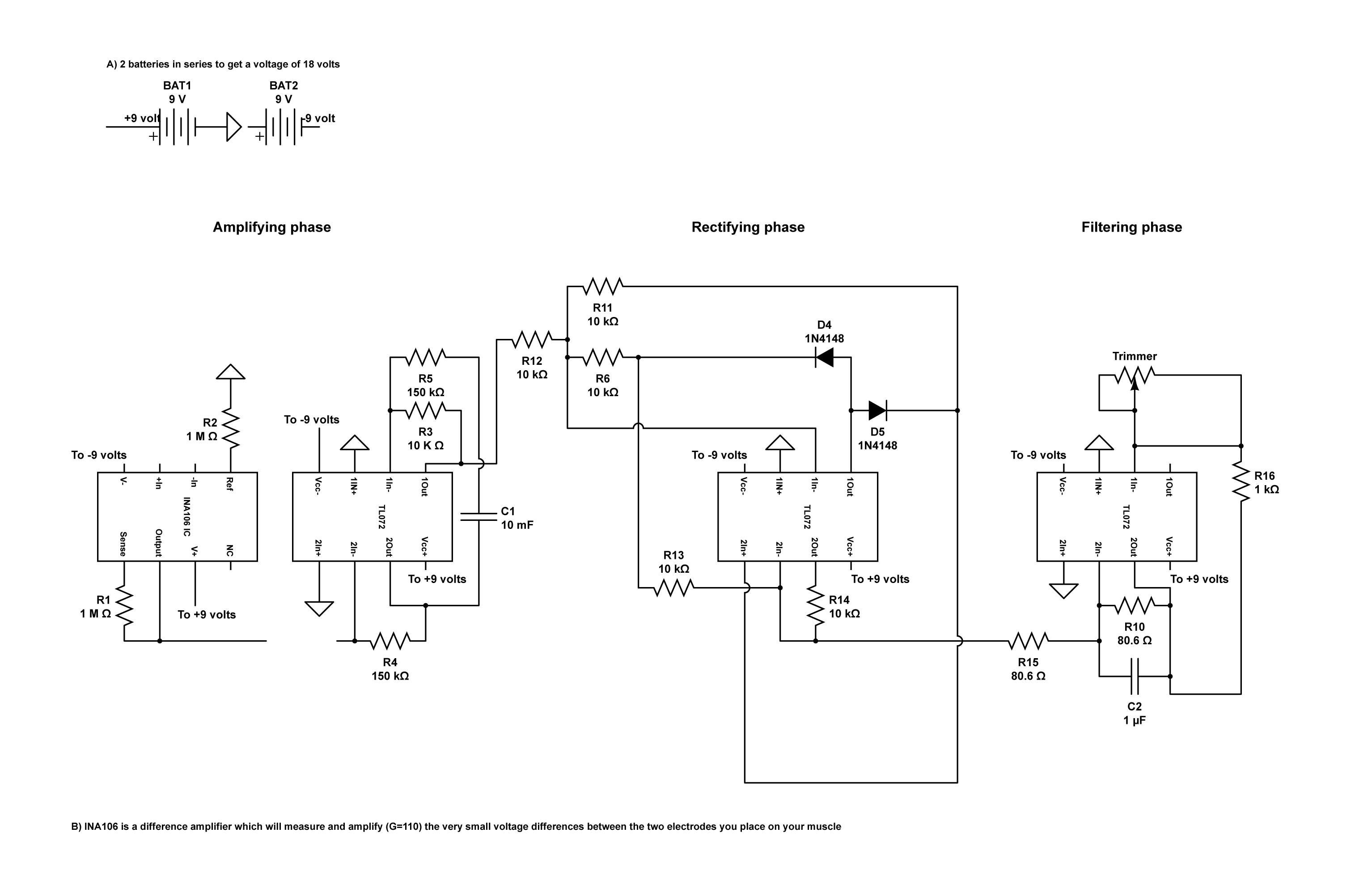

This week we focused primarily on gathering our remaining pieces (electrodes, missing chips) and began round one of building the physical parts of the testing apparatus. The facial geometry element is ready. We had a bit of trouble getting just the right stuff: one of our circuit chips was not available to order, and the BMP085 barometric pressure sensor arrived without a breakout board. That aside, we’re projecting to have the two missing elements by Friday. Until then we’re building out the EMG-to-Arduino circuitry (sans the remaining circuit chip):

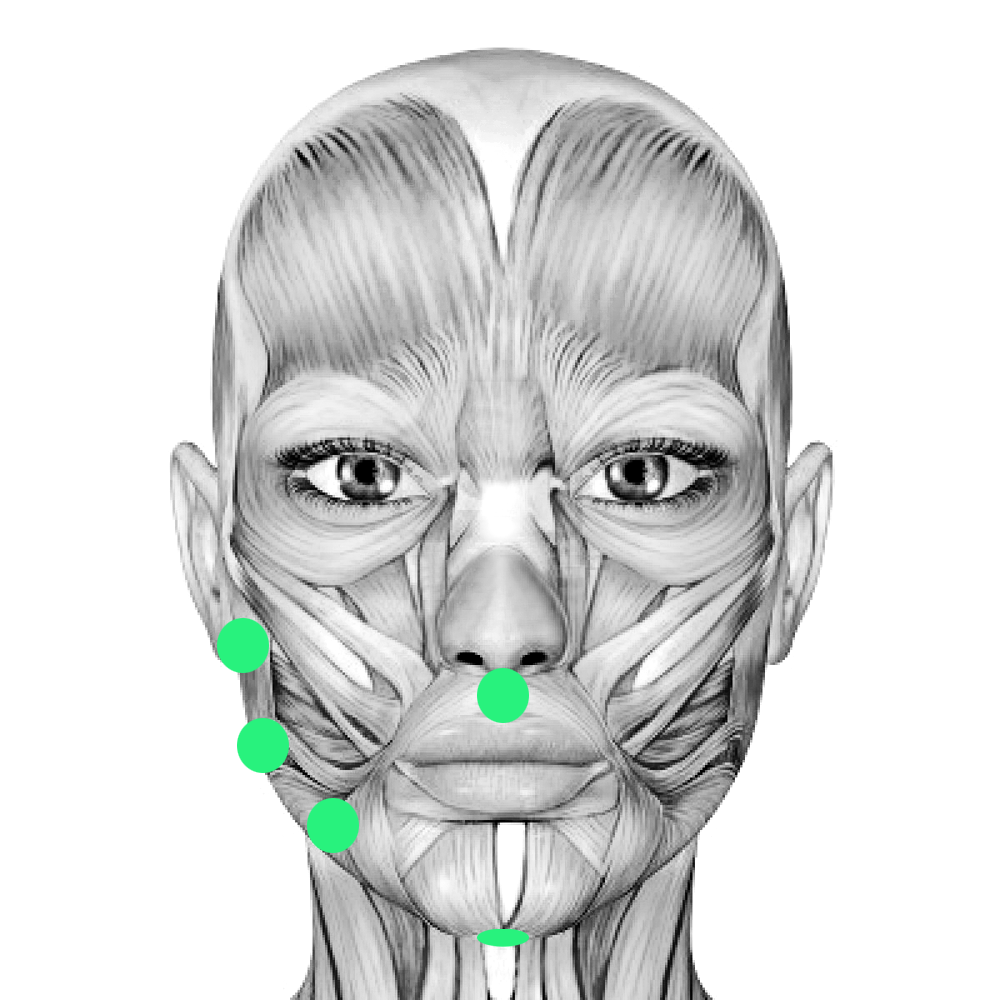

We’ve also been writing preliminary code to read the two physical sensor systems (EMG and BMP085) and print muscle contractions and changes in air pressure and temperature. Much of the pressure printout code is taken from Jim Lindblom’s BMP085 Barometric Pressure Sensor Quickstart post on SparkFun, and much of the muscle contraction printout code is derived from Brian Kaminski’s USB Biofeedback Game Controller project guide on Instructables. We’ve cut the muscle sensors from five down to four. Muscle sensing will occupy analog pins 0 through 3, and the pressure/temperature sensor will occupy analog pins 4 and 5; on an Uno, A4 and A5 double as the I2C SDA and SCL lines. Additionally, we are struggling to work out where and how to place the Reference, Mid and End electrodes on each facial muscle, as we’ve never worked with EMGs before.

Code, thus far:

```cpp
#include <Wire.h>

#define BMP085_ADDRESS 0x77  // I2C address of the BMP085

const unsigned char OSS = 0;  // oversampling setting (0-3)

// Calibration values stored in the BMP085's EEPROM, read once in setup()
int ac1, ac2, ac3;
unsigned int ac4, ac5, ac6;
int b1, b2, mb, mc, md;

// b5 is calculated in bmp085GetTemperature() and reused by bmp085GetPressure()
long b5;

// Four EMG channels on analog pins A0-A3
const int EMG1 = A0;
const int EMG2 = A1;
const int EMG3 = A2;
const int EMG4 = A3;

int sensorValue1 = 0;
int sensorValue2 = 0;
int sensorValue3 = 0;
int sensorValue4 = 0;

void setup() {
  Serial.begin(9600);
  Wire.begin();
  bmp085Calibration();
}

void loop() {
  // Temperature must be read before pressure because it updates b5
  float temperature = bmp085GetTemperature(bmp085ReadUT());
  float pressure = bmp085GetPressure(bmp085ReadUP());
  float atm = pressure / 101325;  // pressure in standard atmospheres

  // Raw 10-bit readings (0-1023) from the EMG boards
  sensorValue1 = analogRead(EMG1);
  sensorValue2 = analogRead(EMG2);
  sensorValue3 = analogRead(EMG3);
  sensorValue4 = analogRead(EMG4);

  Serial.print("Temperature = ");
  Serial.print(temperature, 2);
  Serial.println(" deg C");
  Serial.print("Pressure = ");
  Serial.print(pressure, 0);
  Serial.println(" Pa");
  Serial.print("Standard Atmosphere = ");
  Serial.println(atm, 4);
  Serial.print("Muscle 1 = ");
  Serial.println(sensorValue1);
  Serial.print("Muscle 2 = ");
  Serial.println(sensorValue2);
  Serial.print("Muscle 3 = ");
  Serial.println(sensorValue3);
  Serial.print("Muscle 4 = ");
  Serial.println(sensorValue4);
  Serial.println();

  delay(1000);
}

// Read the calibration values from EEPROM registers 0xAA-0xBE
void bmp085Calibration() {
  ac1 = bmp085ReadInt(0xAA);
  ac2 = bmp085ReadInt(0xAC);
  ac3 = bmp085ReadInt(0xAE);
  ac4 = bmp085ReadInt(0xB0);
  ac5 = bmp085ReadInt(0xB2);
  ac6 = bmp085ReadInt(0xB4);
  b1 = bmp085ReadInt(0xB6);
  b2 = bmp085ReadInt(0xB8);
  mb = bmp085ReadInt(0xBA);
  mc = bmp085ReadInt(0xBC);
  md = bmp085ReadInt(0xBE);
}

// Convert the uncompensated temperature (ut) to degrees C
float bmp085GetTemperature(unsigned int ut) {
  long x1, x2;
  x1 = (((long)ut - (long)ac6) * (long)ac5) >> 15;
  x2 = ((long)mc * 2048) / (x1 + md);
  b5 = x1 + x2;
  float temp = ((b5 + 8) >> 4);  // units of 0.1 deg C
  temp = temp / 10;
  return temp;
}

// Convert the uncompensated pressure (up) to Pa; needs b5 from bmp085GetTemperature()
long bmp085GetPressure(unsigned long up) {
  long x1, x2, x3, b3, b6, p;
  unsigned long b4, b7;

  b6 = b5 - 4000;
  x1 = (b2 * ((b6 * b6) >> 12)) >> 11;
  x2 = (ac2 * b6) >> 11;
  x3 = x1 + x2;
  b3 = (((((long)ac1) * 4 + x3) << OSS) + 2) >> 2;

  x1 = (ac3 * b6) >> 13;
  x2 = (b1 * ((b6 * b6) >> 12)) >> 16;
  x3 = ((x1 + x2) + 2) >> 2;
  b4 = (ac4 * (unsigned long)(x3 + 32768)) >> 15;

  b7 = ((unsigned long)(up - b3) * (50000 >> OSS));
  if (b7 < 0x80000000)
    p = (b7 << 1) / b4;
  else
    p = (b7 / b4) << 1;

  x1 = (p >> 8) * (p >> 8);
  x1 = (x1 * 3038) >> 16;
  x2 = (-7357 * p) >> 16;
  p += (x1 + x2 + 3791) >> 4;
  return p;
}

// Read one byte from a BMP085 register
unsigned char bmp085Read(unsigned char address) {
  Wire.beginTransmission(BMP085_ADDRESS);
  Wire.write(address);
  Wire.endTransmission();
  Wire.requestFrom(BMP085_ADDRESS, 1);
  while (!Wire.available())
    ;  // wait for the byte to arrive
  return Wire.read();
}

// Read two bytes (MSB first) starting at a BMP085 register
int bmp085ReadInt(unsigned char address) {
  unsigned char msb, lsb;
  Wire.beginTransmission(BMP085_ADDRESS);
  Wire.write(address);
  Wire.endTransmission();
  Wire.requestFrom(BMP085_ADDRESS, 2);
  while (Wire.available() < 2)
    ;  // wait for both bytes
  msb = Wire.read();
  lsb = Wire.read();
  return (int)msb << 8 | lsb;
}

// Start a temperature conversion and read the uncompensated value
unsigned int bmp085ReadUT() {
  Wire.beginTransmission(BMP085_ADDRESS);
  Wire.write(0xF4);
  Wire.write(0x2E);
  Wire.endTransmission();
  delay(5);  // give the conversion time to finish
  return bmp085ReadInt(0xF6);
}

// Start a pressure conversion and read the uncompensated value
unsigned long bmp085ReadUP() {
  unsigned char msb, lsb, xlsb;
  Wire.beginTransmission(BMP085_ADDRESS);
  Wire.write(0xF4);
  Wire.write(0x34 + (OSS << 6));
  Wire.endTransmission();
  delay(2 + (3 << OSS));  // conversion time grows with OSS
  msb = bmp085Read(0xF6);
  lsb = bmp085Read(0xF7);
  xlsb = bmp085Read(0xF8);
  return (((unsigned long)msb << 16) | ((unsigned long)lsb << 8) | (unsigned long)xlsb) >> (8 - OSS);
}

// Generic I2C register helpers (not used yet)
void writeRegister(int deviceAddress, byte address, byte val) {
  Wire.beginTransmission(deviceAddress);
  Wire.write(address);
  Wire.write(val);
  Wire.endTransmission();
}

int readRegister(int deviceAddress, byte address) {
  Wire.beginTransmission(deviceAddress);
  Wire.write(address);
  Wire.endTransmission();
  Wire.requestFrom(deviceAddress, 1);
  while (!Wire.available()) {
    // waiting
  }
  return Wire.read();
}
```
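The BMP085 compensation is pure integer arithmetic, so it can be sanity-checked on a laptop while we wait for the breakout board. Below is a minimal host-side C++ sketch of the same steps as `bmp085GetTemperature()` and `bmp085GetPressure()`. It assumes fixed-width `int32_t`/`uint32_t` types can stand in for the Arduino’s 32-bit `long`. The struct and function names (`Bmp085Calibration`, `compensateTemperature`, `compensatePressure`) are our own hypothetical ones, not part of any library. Temperature comes back in 0.1 °C and pressure in Pa.

```cpp
#include <cassert>
#include <cstdint>

// Calibration constants from the BMP085's EEPROM (registers 0xAA-0xBF).
// Hypothetical struct: the Arduino sketch keeps these as globals instead.
struct Bmp085Calibration {
  int16_t ac1, ac2, ac3;
  uint16_t ac4, ac5, ac6;
  int16_t b1, b2, mb, mc, md;
};

// Returns temperature in 0.1 deg C and writes b5, which the pressure step needs.
int32_t compensateTemperature(const Bmp085Calibration& c, uint16_t ut, int32_t& b5) {
  int32_t x1 = ((int32_t)ut - c.ac6) * c.ac5 >> 15;
  int32_t x2 = (int32_t)c.mc * 2048 / (x1 + c.md);
  b5 = x1 + x2;
  return (b5 + 8) >> 4;
}

// Returns pressure in Pa, following the same steps as bmp085GetPressure().
// Right shifts of negative values are arithmetic on mainstream compilers
// (and guaranteed since C++20).
int32_t compensatePressure(const Bmp085Calibration& c, uint32_t up, int32_t b5, unsigned oss) {
  assert(oss <= 3);  // the BMP085 supports oversampling settings 0-3
  int32_t b6 = b5 - 4000;
  int32_t x1 = (c.b2 * ((b6 * b6) >> 12)) >> 11;
  int32_t x2 = (c.ac2 * b6) >> 11;
  int32_t x3 = x1 + x2;
  int32_t b3 = ((((int32_t)c.ac1 * 4 + x3) << oss) + 2) >> 2;

  x1 = (c.ac3 * b6) >> 13;
  x2 = (c.b1 * ((b6 * b6) >> 12)) >> 16;
  x3 = ((x1 + x2) + 2) >> 2;
  uint32_t b4 = ((uint32_t)c.ac4 * (uint32_t)(x3 + 32768)) >> 15;
  uint32_t b7 = (up - (uint32_t)b3) * (50000u >> oss);

  int32_t p = (b7 < 0x80000000u) ? (int32_t)((b7 * 2) / b4) : (int32_t)((b7 / b4) * 2);
  x1 = (p >> 8) * (p >> 8);
  x1 = (x1 * 3038) >> 16;
  x2 = (-7357 * p) >> 16;
  return p + ((x1 + x2 + 3791) >> 4);
}
```

As a check, we can use the worked example in Bosch’s BMP085 datasheet, as we recall it: AC1 = 408, AC2 = -72, AC3 = -14383, AC4 = 32741, AC5 = 32757, AC6 = 23153, B1 = 6190, B2 = 4, MB = -32768, MC = -8711, MD = 2868, UT = 27898, UP = 23843, OSS = 0. With these inputs the functions should return 150 (15.0 °C) and 69964 Pa. Compare against your own copy of the datasheet before relying on those numbers.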

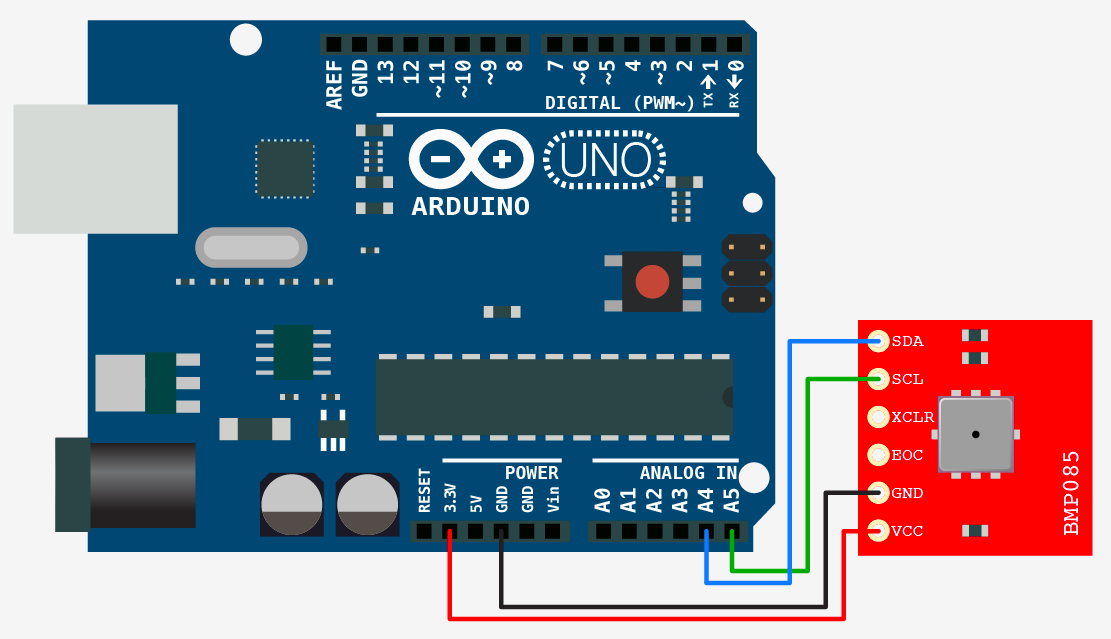

With those issues established, the build for the pressure sensor is super minimal and doesn’t require any extensive setup before we receive the breakout board. BMP085 setup diagram:

Digitizing Facial Movement During Singing

Digitizing Facial Movement During Singing : Prototype

We will be using a three-part apparatus to track facial movements during simple singing: a barometric pressure sensor to monitor airflow, electromyography (EMG) sensors to monitor facial muscles, and a camera to track facial movement. While this data feeds in, we will be manually recording fluctuations at different frequencies. Specifically, the aim is to record changes in pressure, muscle contractions and position for each note in one octave, compared with the face’s resting state. Ideally, data will be collected from at least five professional vocalists.
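To know which pitch each note of the octave should land on, the target frequencies can be computed with twelve-tone equal temperament: f = 440 · 2^((n − 69)/12), where n is the MIDI note number. This is a minimal sketch under assumptions we haven’t actually settled on: A4 = 440 Hz tuning, a major scale (do-re-mi) rather than all twelve semitones, and middle C (MIDI 60) as the starting note. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Equal-tempered frequency of a MIDI note, assuming A4 (MIDI 69) = a4Hz.
double noteFrequency(int midiNote, double a4Hz = 440.0) {
  return a4Hz * std::pow(2.0, (midiNote - 69) / 12.0);
}

// Frequencies of the eight notes of a major scale starting at tonicMidi
// (60 = middle C gives C4 D4 E4 F4 G4 A4 B4 C5).
std::vector<double> majorScaleOctave(int tonicMidi) {
  assert(tonicMidi >= 0 && tonicMidi <= 115);  // keep the top note within MIDI 0-127
  const int steps[] = {0, 2, 4, 5, 7, 9, 11, 12};  // semitones above the tonic
  std::vector<double> freqs;
  for (int s : steps) {
    freqs.push_back(noteFrequency(tonicMidi + s));
  }
  return freqs;
}
```

For middle C this gives roughly 261.63 Hz up to 523.25 Hz, with A4 at exactly 440 Hz. Each recording session could then be labeled with its target frequency.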

The barometric pressure sensor will be placed in front of the test subject, discreetly mounted on a tiny stand. The EMGs will be attached (via medical adhesive) over the muscles of the lips, jaw, tongue and larynx that are involved in articulation. Relevant muscles include the orbicularis oris (lips), geniohyoid (tongue and lower jaw), mylohyoid (mandible), masseter (cheek and jaw) and hyoglossus (tongue). Finally, facial movement and geometry will be monitored using a Kinect.

Digitizing Facial Movement During Singing : Sensor Assembly

Pressure Sensor Circuit Diagram:

[IMAGE]

DIY Electromyography Circuit Diagram & EMG Map:

FaceOSC/Syphon/Processing Source Code:

```java
import codeanticode.syphon.*;
import oscP5.*;

PGraphics canvas;
SyphonClient client;
OscP5 oscP5;
Face face = new Face();  // parses the OSC messages FaceOSC sends (Face class linked below)

public void setup() {
  size(640, 480, P3D);
  println("Available Syphon servers:");
  println(SyphonClient.listServers());
  // Camera frames from FaceOSC arrive over Syphon...
  client = new SyphonClient(this, "FaceOSC");
  // ...and the tracking data arrives over OSC on port 8338
  oscP5 = new OscP5(this, 8338);
}

public void draw() {
  background(255);
  if (client.available()) {
    canvas = client.getGraphics(canvas);
    image(canvas, 0, 0, width, height);
  }
  print(face.toString());
}

// Called by oscP5 whenever an OSC message arrives
void oscEvent(OscMessage m) {
  face.parseOSC(m);
}
```

+ LINK to the Face class.

Digitizing Facial Movement During Singing : Troubleshooting

Currently, we’ve only run real tests on the FaceOSC/Syphon/Processing facial geometry detector. We are able to mine the following data (seen in the above screen capture):

```
pose
  scale: 3.8911576
  position: [ 331.74725, 153.13004, 0.0 ]
  orientation: [ 0.107623726, -0.06095604, 0.085640974 ]
gesture
  mouth: 14.871553 4.777506
  eye: 2.649438 2.6013117
  eyebrow: 7.41446 7.520543
  jaw: 24.912415
  nostrils: 5.7812777
```

Because we’re using the on-camera feed to track geometric displacement in the face (relating to muscle movement), only the data in the “gesture” category really applies. The entries with two values report the mouth’s width and height, and the left and right eye and eyebrow. Fortunately, scale, position and orientation do not bias the gesture readings. Unfortunately, there is a fair bit of natural fluctuation in the readings. From observation, we’re treating changes greater than 0.5 as actual movement and changes under 0.5 as natural oscillation in the camera’s readings.
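The 0.5 rule above amounts to a deadband filter. Here is a minimal C++ sketch of it for one gesture value (for example, jaw). It assumes we compare each new reading against the last value we accepted as real movement, not against the previous raw frame, so that slow drift still registers once it adds up. The class name `GestureDeadband` is hypothetical, and the same logic would port directly into the Processing sketch.

```cpp
#include <cassert>
#include <cmath>

// Deadband filter for one FaceOSC gesture value. A reading counts as movement
// only when it differs from the last accepted value by more than `threshold`;
// smaller changes are treated as camera jitter and ignored.
class GestureDeadband {
 public:
  explicit GestureDeadband(double threshold = 0.5) : threshold_(threshold) {
    assert(threshold >= 0.0);
  }

  // Returns true if `reading` is treated as real movement.
  bool update(double reading) {
    if (!hasValue_) {  // the first reading becomes the reference
      value_ = reading;
      hasValue_ = true;
      return false;
    }
    if (std::fabs(reading - value_) > threshold_) {
      value_ = reading;  // accept the new position
      return true;
    }
    return false;  // within the jitter band: keep the old value
  }

  double value() const { return value_; }

 private:
  double threshold_;
  double value_ = 0.0;
  bool hasValue_ = false;
};
```

For example, starting from the jaw reading of 24.912415, a new reading of 25.1 is ignored as jitter, while 25.6 is reported as movement.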

Digitizing Facial Movement During Singing : Next Steps

With that established, our current plan of action is as follows:

1) Physically assemble the remaining two parts of the three-part testing apparatus.

2) Test and continue troubleshooting.

3) Record data.

4) Pinpoint connections in the data (the relationship between movement/muscle/airflow and pitch).

5) Finally, apply the relationships we find to an application that generates sound via our sensing apparatus.
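One simple first pass at pinpointing connections in the data would be Pearson’s correlation coefficient between a single sensor channel and the sung pitch. This is our own swap-in for illustration, not a settled method. It assumes each sample pairs a sensor value with the pitch recorded at the same moment, and it only captures linear relationships. The function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Pearson correlation between two equally long series, e.g. one sensor
// channel (x) and the sung pitch (y) sampled at the same moments.
// Returns a value in [-1, 1]; values near 0 suggest no linear relationship.
double pearson(const std::vector<double>& x, const std::vector<double>& y) {
  assert(x.size() == y.size() && x.size() >= 2);
  const double n = static_cast<double>(x.size());
  double meanX = 0.0, meanY = 0.0;
  for (std::size_t i = 0; i < x.size(); ++i) {
    meanX += x[i];
    meanY += y[i];
  }
  meanX /= n;
  meanY /= n;

  double sxy = 0.0, sxx = 0.0, syy = 0.0;
  for (std::size_t i = 0; i < x.size(); ++i) {
    const double dx = x[i] - meanX;
    const double dy = y[i] - meanY;
    sxy += dx * dy;
    sxx += dx * dx;
    syy += dy * dy;
  }
  assert(sxx > 0.0 && syy > 0.0);  // a constant series has no defined correlation
  return sxy / std::sqrt(sxx * syy);
}
```

Running this once per channel (four EMG channels, pressure, each gesture value) would give a quick ranking of which signals track pitch at all before trying anything fancier.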

Fun Stuff: Reddit and Biomechanics

Stellar thread from the front page yesterday: “If you were tasked to redesign the human body, what would you change?”

There are actually some super interesting biomechanics musings.

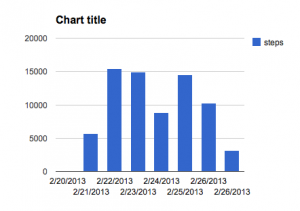

FitBit Vis

Trollin’ for Biomechanics topics

I’ve narrowed down (tough, it was) my research options to the following three:

1) Taking on and readdressing Formosa’s “tools for women” effort.

Why? Because one of my closest friends is an OB/GYN (specializing in providing abortions in rural regions normally lacking such resources). Despite Formosa’s arguments about small women and large utensils in hospital work, she has little concern about that issue. Most of her technological/instrument woes lean toward patient compliance and fit.

2) A machine that uses flex sensors to detect movement and change in facial muscles/mechanics, output TBD.

Why? Because I’m absolutely fascinated by the physics of sound, the origin of the phonetic alphabet (Phoenician/Greek war tool later adapted by the Romans), and the mechanics of singing.

3) Sensory Swapping for the deaf.

Why? My undergraduate thesis was an animated redesign of closed captioning for the deaf and hard of hearing. It demanded captions that were in time with the film sounds; sensitive to volume, intonation, cadence and semantics; and provided animators with a package of animation algorithms for specific common emotions/sounds.

Thus far I’ve just been openly and freely pursuing the three topics without any direct initiative toward a final product, just to get my feet wet, get excited and narrow the decision-making process.

RESEARCH PROGRESS :

1) I’ve had one quick discussion with the renegade abortionist, which led me toward looking into how patients fit the tools that doctors use rather than how the tools fit the doctors.

2) By far the most promising topic thus far. Found two exceptional pieces on not just the muscles of the face but the muscular and structural mechanics of speech AND two solid journal articles about digitizing facial tracking. I’m not sure exactly what I can do with the data from examining facial muscle movement during speech but I feel like this subject potentially has a LOT of depth and the opportunity to combine with subject three. After reading the better part of The Mechanics of the Human Voice, and being inspired by the Physiology of Phonation chapter, I’m now considering developing a system of sensors (most likely flex sensors and a camera, and/or something that can track vocal cord movement) to log the muscle position of singers during different styles and pitches in an attempt to funnel the data into an audio output that translates the sound. For example, is there a particular and common muscle contraction/extension that occurs during high C? The computer sees that contraction and plays the note.

3) Read a few chapters of David Eagleman’s Incognito: The Secret Lives of the Brain. The book is both wonderful and misleading. Touting itself as an enlightening journey through the brain, it eventually reveals its true agenda: an argumentative piece angled to sway common folk into reconsidering their opinions on criminal law and sentencing. Regardless, the Testimony of the Senses chapter does an incredible job of describing how little data (compared with what’s out there) our senses actually pull in. It also underlines that it really is just data, by referencing the effect of sensory innovations on people like Mike May and Eric Weihenmayer. May lost his sight at age 3, regained it, and could not reconcile the meaning of visual data for a fairly long time. Weihenmayer is an extreme rock climber who uses a sonar sensor connected to a grid on his tongue to “see” mountains during unassisted climbs.
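The high C idea in item 2 (“the computer sees that contraction and plays the note”) could start as a nearest-template lookup. First, record the average sensor readings while a singer holds each known note. Then match live readings to whichever recorded template is closest in Euclidean distance. This is a minimal sketch, not a method we’ve tested. `NoteTemplate` and `matchNote` are hypothetical names, and one reading per sensor channel (e.g. four EMG channels) is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <string>
#include <vector>

// One calibration template: averaged sensor readings recorded while a
// singer holds a known note (hypothetical data structure).
struct NoteTemplate {
  std::string note;              // e.g. "C6"
  std::vector<double> readings;  // one averaged value per sensor channel
};

// Nearest-template lookup: returns the note whose recorded readings are
// closest (Euclidean distance) to the live readings.
std::string matchNote(const std::vector<NoteTemplate>& templates,
                      const std::vector<double>& live) {
  assert(!templates.empty());
  std::string best;
  double bestDist = std::numeric_limits<double>::infinity();
  for (const NoteTemplate& t : templates) {
    assert(t.readings.size() == live.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < live.size(); ++i) {
      const double d = live[i] - t.readings[i];
      sum += d * d;
    }
    const double dist = std::sqrt(sum);
    if (dist < bestDist) {
      bestDist = dist;
      best = t.note;
    }
  }
  return best;
}
```

If the channels mix units (raw EMG counts alongside pressure in Pa), they would need to be normalized first. Otherwise the largest-valued channel dominates the distance.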

Open Access for Government Research

Before posting my research from this week, I really wanted to share this (as scholarly journal accessibility/exclusivity has been a fairly popular topic in class):

White House directs open access for government research

Annnd Friday’s Office of Science and Technology Policy statement: Expanding Public Access to the Results of Federally Funded Research

We made it! It’s better! Buy it!

It seems obvious, via the quantitative results of Figures 5 and 6, that Syringe R requires fewer newtons of isometric force on the plunger. AWESOME. WIN. IT WORKS. But are we supposed to consider that the end? The cure? I read through this article with tinges of skepticism.

First, this is essentially an advertisement, no?

Second, how did Smart Design arrive at this syringe redesign in the first place? I’m not suggesting that the design was ill-informed, but rather that I’m just plain curious; I want to know the originating design factors and inspirations before I read test results!

Third, how the hell do people with rheumatoid arthritis get the tourniquet on? Did anyone else wonder about this? It’s a critical step in self-injection of any kind. Leaps and bounds in syringe design are meaningless if the patient still struggles painfully to prep the injection site. They touched on common RA injection sites (leg, abdomen) but did not address tourniquet use.

Also, the article opens with a simple declaration: shifting to self-administration in RA treatment means pursuing a more thorough understanding of the patient’s ability to self-inject. The researchers acknowledged that injection ability varies by enlisting a test group of subjects with distinctly varying disease severity. GREAT. But they left out many qualitative factors. They neglected to address how comfortable and experienced each subject was with syringes and with administering medication. This is critical. They also neglected to survey the subjects’ previous injection preference (auto-injector vs. pre-filled syringe). Which brings me to my next question: how could this data be used to draft a more ergonomic auto-injector? Despite not being mentioned in this study, many clinical trials (e.g. http://clinicaltrials.gov/ct2/show/NCT00094341) have suggested that auto-injection is currently preferred. Wouldn’t it be better to pursue an apparatus that more closely resembles the “pager pumps” used by diabetics? That is, a system which assumes near-full integration with the body.

In the end, the article felt like an over-simplified advertisement for a Smart Design product under the guise of a published journal article. … But what do I know?

AFTERTHOUGHT:

Why was feminine/masculine one of the design attributes? Is this relevant? I thought Dan Formosa was about functionality for all genders, not gendering products?

Joint Color Tracker

A cluster of circles that are attracted to an on-screen color, in this case red. I calibrated the color to my lipstick because it was the easiest thing to smear on my face that contrasted with my green-ish surroundings. The idea was to track acceleration and drag in the elbow joint’s extension.

If a color stays on screen long enough, the circles cluster around it. If the color then moves, the circles follow, subject to physical properties (friction, the bounds of the screen, the acceleration of the motion). I used this to abstractly track the movement of my elbow, concept diagram below.

```java
import processing.video.*;

Mover[] movers = new Mover[100];
Attractor a;
Capture cam;
color trackColor;  // color to follow (calibrated to lipstick red)

void setup() {
  size(600, 400);
  smooth();
  for (int i = 0; i < movers.length; i++) {
    movers[i] = new Mover(random(0.1, 2), random(width), random(height));
  }
  a = new Attractor();

  // Print the available cameras, then use the first one
  String[] cameras = Capture.list();
  for (int i = 0; i < cameras.length; i++) {
    println(cameras[i]);
  }
  cam = new Capture(this, cameras[0]);
  cam.start();

  trackColor = color(90, 25, 40);
}

void draw() {
  background(255, 0);
  a.display();  // draws the camera frame and moves the attractor to the tracked color
  for (int i = 0; i < movers.length; i++) {
    PVector force = a.attract(movers[i]);
    movers[i].applyForce(force);
    movers[i].boundaries();
    movers[i].update();
    movers[i].display();
  }
}

class Attractor {
  float mass;
  float G;
  PVector location;

  Attractor() {
    location = new PVector(width/2, height/2);
    mass = 20;
    G = 2;
  }

  // Inverse-square pull; the distance is clamped so the force stays bounded
  PVector attract(Mover m) {
    PVector force = PVector.sub(location, m.location);
    float d = force.mag();
    d = constrain(d, 5.0, 25.0);
    force.normalize();
    float strength = (G * mass * m.mass) / (d * d);
    force.mult(strength);
    return force;
  }

  void display() {
    if (cam.available()) {
      cam.read();
    }
    cam.loadPixels();
    image(cam, 0, 0);

    // Find the pixel whose color is closest (in RGB space) to trackColor
    float r2 = red(trackColor);
    float g2 = green(trackColor);
    float b2 = blue(trackColor);
    float worldRecord = 500;
    int closestX = 0;
    int closestY = 0;
    for (int x = 0; x < cam.width; x++) {
      for (int y = 0; y < cam.height; y++) {
        color currentColor = cam.pixels[x + y*cam.width];
        float d = dist(red(currentColor), green(currentColor), blue(currentColor), r2, g2, b2);
        if (d < worldRecord) {
          worldRecord = d;
          closestX = x;
          closestY = y;
        }
      }
    }

    // Only count it as a match if the color is close enough
    if (worldRecord < 50) {
      location.set(closestX, closestY);  // pull the movers toward the tracked color
      fill(90, 25, 40, 0);
      strokeWeight(4.0);
      stroke(0);
      ellipse(closestX, closestY, mass*2, mass*2);
    }
  }
}

class Mover {
  PVector location;
  PVector velocity;
  PVector acceleration;
  float mass;

  Mover(float m, float x, float y) {
    mass = m;
    location = new PVector(x, y);  // start at the position passed in
    velocity = new PVector(1.5, 0);
    acceleration = new PVector(0, 0);
  }

  // Newton's second law: a = F / m
  void applyForce(PVector force) {
    PVector f = PVector.div(force, mass);
    acceleration.add(f);
  }

  void update() {
    velocity.add(acceleration);
    location.add(velocity);
    acceleration.mult(0);  // forces are re-applied every frame
  }

  void display() {
    stroke(255);
    strokeWeight(0);
    fill(255, 50);
    ellipse(location.x, location.y, mass*10, mass*10);
  }

  // Gentle push back toward the middle when within d pixels of an edge
  void boundaries() {
    float d = 50;
    PVector force = new PVector(0, 0);
    if (location.x < d) {
      force.x = 1;
    } else if (location.x > width - d) {
      force.x = -1;
    }
    if (location.y < d) {
      force.y = 1;
    } else if (location.y > height - d) {
      force.y = -1;
    }
    force.normalize();
    force.mult(0.1);
    applyForce(force);
  }
}
```

http://itp.nyu.edu/~mc4562/noc/colourfollow/VideoAttractor.pde
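To check the tracker’s physics by hand, here is a small C++ port of the two core calculations in the Processing sketch. The first is the inverse-square pull in `Attractor.attract()`, with distance clamped to [5, 25]. The second is the RGB distance test in `Attractor.display()`. `Vec2`, `attractionForce` and `colorDistance` are hypothetical names, and the zero-distance guard mirrors how `PVector.normalize()` leaves a zero vector unchanged. With the sketch’s G = 2 and attractor mass 20, a mover of mass 1 that is 100 px away is treated as 25 px away. It therefore feels 2 · 20 · 1 / 25² = 0.064.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec2 {
  double x, y;
};

// Inverse-square pull toward the attractor; the distance is clamped to
// [minD, maxD] so movers neither shoot off when very close nor stop
// feeling the pull when far away.
Vec2 attractionForce(Vec2 attractor, Vec2 mover, double G, double attractorMass,
                     double moverMass, double minD = 5.0, double maxD = 25.0) {
  assert(minD > 0.0 && minD <= maxD);
  const Vec2 dir{attractor.x - mover.x, attractor.y - mover.y};
  const double len = std::hypot(dir.x, dir.y);
  if (len == 0.0) return {0.0, 0.0};  // mover sits on the attractor: no direction
  const double d = std::min(std::max(len, minD), maxD);
  const double strength = (G * attractorMass * moverMass) / (d * d);
  return {dir.x / len * strength, dir.y / len * strength};
}

// Euclidean distance between two colors in RGB space (0-255 per channel).
double colorDistance(int r1, int g1, int b1, int r2, int g2, int b2) {
  const double dr = r1 - r2, dg = g1 - g2, db = b1 - b2;
  return std::sqrt(dr * dr + dg * dg + db * db);
}
```

The clamp is why the circles settle into a loose cluster rather than collapsing onto the tracked point. Below 5 px the pull stops growing, so the boundary push and the movers’ own velocity can keep them in orbit.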