Expressivity in human motion is traditionally described in qualitative terms. In biomechanics, it is most often analyzed quantitatively as the movement of joints through space. However, by examining the biomechanical “cost” of bodily motion, we may be able to describe the expressive (and affective) content of non-verbal communication between humans in richer quantitative terms.

The word “expressive” comes from a Latin root meaning “to press out.” Even its etymology, then, carries the idea of a force needed to convey a thought or feeling. A review of the relevant literature shows that expressivity has indeed been analyzed from a biomechanical standpoint. However, the existing work is concerned mainly with kinematics, that is, with describing how limbs and joints move through space. Typically, this work addresses:

- Frameworks for describing motion, often borrowing from theater and dance

- Categorizations of emotion exhibited in movement

- Mapping of human motion to animation

Further, the existing work looks at individuals. By contrast, work involving two or more people tends to take the form of gesture studies of the non-verbal language between individuals, without a biomechanical perspective. Finally, when these motions are measured and recorded, the studies typically employ expensive motion capture systems.

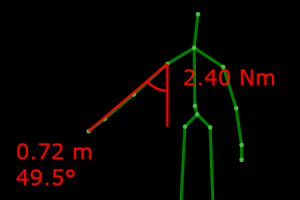

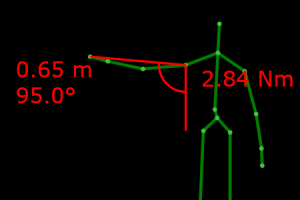

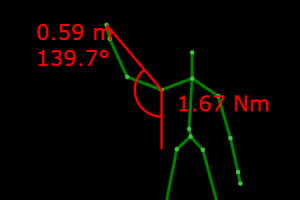

My proposed exploration of this area will take several different approaches. First, I propose examining expressivity as a biomechanical phenomenon, incorporating not only kinematics but also a simple kinetic model of human motion. Specifically, the goal is to correlate emotional “force” with the forces involved in expressive gestures, on the hypothesis that bigger emotional displays require bigger biomechanical forces. Second, because expressive gestural displays are most typically found in the interactions of two or more people, this work will look at non-verbal gesturing between individuals. Lastly, whereas existing approaches typically rely on fine-grained motion analysis with expensive equipment, this approach will use coarse-grained analysis with inexpensive depth cameras such as the Kinect.
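To make the idea of a simple kinetic model more concrete, here is a minimal sketch, assuming each tracked joint is treated as a point mass and that the depth camera reports 3D joint positions in metres at a fixed frame rate. Newton's second law, F = ma, then yields a force estimate from positions differentiated twice. It captures only the net force implied by the joint's acceleration and ignores gravity and joint torques. The function names, the 30 fps rate, the 1.5 kg segment mass, and the synthetic gestures are hypothetical placeholders, not values taken from the Kinect SDK or from measured data.

```python
import numpy as np


def segment_force_magnitudes(positions, fps=30.0, segment_mass_kg=1.5):
    """Estimate |F| = m * |a| per frame for one tracked joint.

    positions: (T, 3) array of joint coordinates in metres, one row per frame.
    segment_mass_kg: hypothetical point mass standing in for the moving segment.
    Ignores gravity and joint torques; only the net force from motion is estimated.
    """
    positions = np.asarray(positions, dtype=float)
    dt = 1.0 / fps
    velocity = np.gradient(positions, dt, axis=0)      # finite-difference velocity
    acceleration = np.gradient(velocity, dt, axis=0)   # finite-difference acceleration
    return segment_mass_kg * np.linalg.norm(acceleration, axis=1)


def sinusoidal_gesture(amplitude_m, freq_hz=1.0, seconds=2.0, fps=30.0):
    """Hypothetical side-to-side hand sweep, used only for illustration."""
    t = np.arange(0.0, seconds, 1.0 / fps)
    x = amplitude_m * np.sin(2 * np.pi * freq_hz * t)
    return np.column_stack([x, np.zeros_like(t), np.full_like(t, 2.0)])


# Same tempo, different size: a small gesture versus a large one.
small = segment_force_magnitudes(sinusoidal_gesture(0.05)).max()
large = segment_force_magnitudes(sinusoidal_gesture(0.30)).max()
print(f"peak force, small gesture: {small:.2f} N")
print(f"peak force, large gesture: {large:.2f} N")
```

Because the estimate is linear in position, a sweep with six times the amplitude at the same tempo produces six times the peak force. This is the kind of relation between gesture size and biomechanical force that the proposal hypothesizes. Real depth-camera trajectories are noisy, and differentiating twice amplifies that noise, so the positions would likely need smoothing before such estimates are meaningful.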

Works Consulted:

- Behavioral Biomechanics Lab, Department of Kinesiology, University of Michigan

The Behavioral Biomechanics Lab studies the connection between emotional expression and biomechanics. The lab’s stated purpose and its project list (http://www.sitemaker.umich.edu/mgrosslab/projects) make plain that its emphasis is solely on the kinematics of emotion in individuals. Its linked work reveals a heavy reliance on elaborate motion capture systems.

- Crane, Elizabeth, and Melissa Gross. “Methodological Considerations for Quantifying Emotionally Expressive Movement Style.” Ann Arbor, MI (2007).

The authors (members of the Behavioral Biomechanics Lab at the University of Michigan) note that whole-body movement is difficult to analyze and that methods for analyzing it often rely on coding rather than quantitative measurement. Their solution is to use motion capture systems to analyze the kinematics of body movement. The paper discusses many of the methodological issues involved in obtaining good motion capture data from test subjects.

- Pelachaud, Catherine, et al. “Expressive Gestures Displayed by a Humanoid Robot during a Storytelling Application.” New Frontiers in Human-Robot Interaction (AISB), Leicester, GB (2010).

Here the authors describe early work on having a robot read stories aloud while gesturing in a physically expressive manner that complements the story. Ultimately, the authors develop a descriptive framework, derived from a video corpus of human storytellers, that allows a robot to mimic human expressiveness. The approach treats expressivity as a matter of replicating kinematics.

- Hertzmann, Aaron, Carol O’Sullivan, and Ken Perlin. “Realistic human body movement for emotional expressiveness.” ACM SIGGRAPH 2009 Courses. ACM, 2009.

The authors present an overview of the issues of expressiveness in animation as a course at SIGGRAPH 2009. Although much of the material covers issues already noted elsewhere in this review, they touch on the early failures of, and current progress towards, physics-based (i.e., truly biomechanical) character animation. Rather than simply replaying the kinematics of captured motion (with some latitude), a fully physics-based character could move through space as a real body would, regardless of circumstance. The authors present the small mathematical models necessary for such animation.

- Meyerhold’s Biomechanics

Vsevolod Meyerhold developed a method of actor training he called Biomechanics (only loosely related to the engineering discipline). The practice and training are built around the interrelation of psychology and physiology to aid emotional expression on stage.

- Chi, Diane M. A motion control scheme for animating expressive arm movements. Diss. University of Pennsylvania, 1999.

This dissertation judges existing biomechanical models far too limited to address the fine aspects of human motion needed to analyze and replicate expressive movement. Consequently, the author draws on artistic approaches to movement, ultimately proceduralizing components of Laban Movement Analysis to produce expressive arm movements in an animated figure.